What is L2 Regularization (L2 = Ridge Regression)?

A regression model that uses L1 regularization is called lasso regression, and one that uses L2 regularization is called ridge regression.

L2 regularization, also called ridge regression, adds the “squared magnitude” of the coefficients as a penalty term to the loss function.

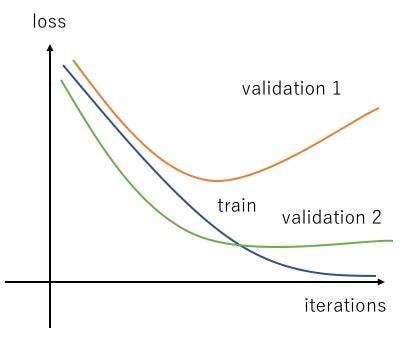

Overfitting happens when the model learns the noise in the training data along with the signal, so it performs poorly on new, unseen data that it wasn’t trained on.

There are several ways to avoid overfitting a model to the training data, such as cross-validation, reducing the number of features, pruning, and regularization.

Here, we avoid overfitting by using regularization.

- A regression model that uses L2 regularization is called ridge regression.

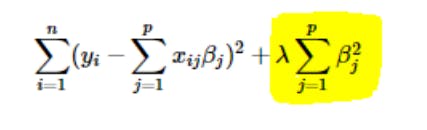

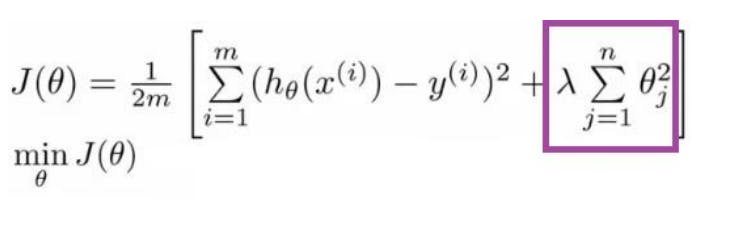

- The formula for ridge regression:

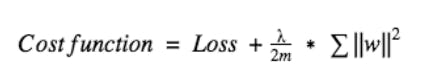
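
In case the image does not render, here is the ridge cost function in its usual textbook form. The notation is an assumption and may differ from the image: $n$ samples, $p$ features, intercept $\beta_0$, coefficients $\beta_j$, and regularization parameter $\lambda \ge 0$. Some references scale the first sum by $1/n$ or $1/(2n)$, which changes the effective size of $\lambda$ but not the idea.

```latex
J(\beta_0, \beta) =
  \underbrace{\sum_{i=1}^{n} \Big( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\,\beta_j \Big)^2}_{\text{squared-error loss}}
  \; + \;
  \underbrace{\lambda \sum_{j=1}^{p} \beta_j^2}_{\text{L2 penalty}}
```

The second sum starts at $j = 1$, so the intercept $\beta_0$ is not part of the penalty.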

Regularization adds a penalty that grows as model complexity increases. The regularization parameter λ (lambda) controls the strength of this penalty, which applies to all parameters except the intercept, so that the model generalizes to new data and does not overfit.
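
To make the effect of lambda concrete, here is a minimal sketch for the simplest case: one feature, with the intercept left unpenalized. It assumes the cost is the sum of squared errors plus `lam * w**2`. The function name `fit_ridge_1d` and the toy data are made up for illustration and do not come from any library.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge fit for a single feature.

    Minimizes sum((y - b0 - w * x) ** 2) + lam * w ** 2.
    Only the slope w is penalized; the intercept b0 is not.
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Centering x and y removes the intercept from the problem,
    # so the penalty only ever touches the slope.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / (sxx + lam)      # lam > 0 shrinks the slope toward zero
    b0 = y_mean - w * x_mean   # intercept recovered without any penalty
    return b0, w


# Hypothetical toy data, roughly y = 2x + 1 with a little noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]

for lam in (0.0, 1.0, 10.0, 100.0):
    b0, w = fit_ridge_1d(xs, ys, lam)
    print(f"lambda={lam:6.1f}  intercept={b0:.3f}  slope={w:.3f}")
```

With `lam = 0` this reduces to ordinary least squares. As `lam` grows, the slope shrinks toward zero and the intercept moves toward the mean of `ys`, so the fitted line gets flatter and less sensitive to noise in the training data.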

Ridge regression adds the “squared magnitude” of the coefficients as a penalty term to the loss function. The boxed part of the image above is the L2 regularization term.