What is ResNet?

ResNet, short for Residual Neural Network, was introduced by Kaiming He et al. and won ILSVRC 2015.

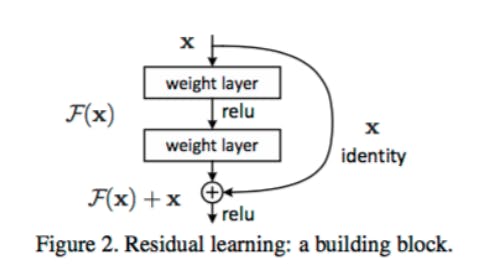

This architecture introduced a concept called “skip connections”.

In a plain network, the input passes through two linear transformations, each followed by a ReLU activation. In a residual network, the input is also copied directly to the output of the second transformation, and the sum of the two is passed through the final ReLU.
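To make the difference concrete, here is a minimal sketch in plain Python. It assumes fully connected layers without biases and square weight matrices, so the input and output have the same size. The names `plain_block` and `residual_block` are made up for illustration, and the real ResNet uses convolutions and batch normalization rather than these toy layers.

```python
def relu(v):
    # Element-wise ReLU on a list of floats.
    return [max(0.0, x) for x in v]


def linear(W, v):
    # Matrix-vector product; W is a list of rows.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def plain_block(x, W1, W2):
    # Two transformations, each followed by ReLU.
    return relu(linear(W2, relu(linear(W1, x))))


def residual_block(x, W1, W2):
    # Same two transformations, but the input x is added to the
    # output of the second one before the final ReLU.
    f = linear(W2, relu(linear(W1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]
print(residual_block(x, zeros, zeros))  # [1.0, 0.0, 3.0]
```

With all weights set to zero, the plain block loses the input entirely, while the residual block still passes `relu(x)` through. This hints at why a residual block can fall back to something close to an identity mapping when the extra layers are not needed.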

Skip Connection

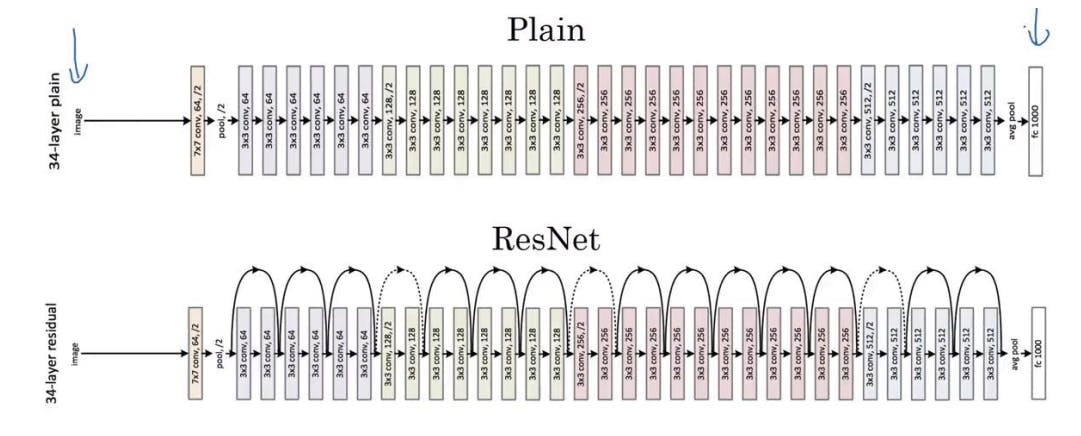

The experiments in the paper show the power of the residual network. The plain 34-layer network had a higher validation error than the plain 18-layer network.

This is the degradation problem: making a plain network deeper increases its error instead of reducing it. When the same 34-layer network is converted to a residual network, however, it reaches a much lower training error than the 18-layer residual network.